ADAS & AUTONOMOUS VEHICLE TECHNOLOGY EXPO AND CONFERENCE RETURNS TO CALIFORNIA!

Join us in Silicon Valley in August to help enable the future of mobility.

It’s the leading expo and conference for every aspect of ADAS and autonomous vehicle testing, development and validation technologies, taking place every year in California and Europe.

At the free-to-attend exhibition, visitors can see, source, trial and test next-generation technologies and solutions from specialist suppliers at the forefront of ADAS and autonomous vehicle development, from the big players to the startups.

The end-to-end ADAS/AV ecosystem will be on display to help accelerate your test and validation programs, whether that’s developing new vehicles, robotaxis, off-highway vehicles, last-mile delivery, autonomous trucks, social mobility, micromobility or autonomous shuttles. See simulation and software, testing tools, AI, sensors and sensor fusion, data acquisition and management, vehicle positioning and localization, and V2X and 5G communications, among others, to enable and accelerate end-to-end autonomous and ADAS applications.

Plus, the two-day conference brings together world-leading experts in the fields of autonomous vehicle research, AI, software, sensor fusion, AV testing, validation, development, standards and safety. Hear best practices and strategies from international OEMs, Tier 1 suppliers, research and development centers and innovative transportation startups concerning the development and testing of safe autonomous driving and ADAS technologies.

Join us in Silicon Valley this August to help enable the future of mobility.

What to expect

- Two days of live demonstrations

See what’s happening on the market and the latest technologies that will significantly improve vehicle safety, enhance your products and set your brand apart.

- Speed up your decision making

Implement time and cost-saving solutions in your test, development and validation programs.

- Accelerate software-defined vehicle development

Source next-gen componentry, technologies and solutions to accelerate software-defined vehicle development.

- See the end-to-end ADAS/AV ecosystem on display

Dataloggers, precise localization, AD simulation and validation, sensors/systems, artificial intelligence, wireless communications (5G, V2V, V2X), testing tools, test tracks/facilities and more.

- Meet new and existing specialist suppliers in one venue

Speak to industry experts from Aimotive, ASAM, Continental Tire the Americas, Keysight Technologies, Deepen AI, Business Sweden, dSPACE, Oxford Technical Solutions, Siemens, Rohde & Schwarz, AB Dynamics/DRI and more, from the big players to the startups.

- The two-day conference

The conference brings together world-leading experts in autonomous vehicle research, AI, software, sensor fusion, AV testing, validation, development, standards and safety.

80+ speakers from international OEMs, Tier 1 suppliers, R&D centers and innovative transportation startups will share best practices and innovative strategies for the latest ADAS/AV testing protocols, procedures and solutions.

Hear from IBM, Volvo Cars, Torc Robotics, Tier IV, The Goodyear Tire & Rubber Company, Argonne National Laboratory and more.

Gallery

Check our gallery of pictures from recent events

Some of the Products on Show

Conference Highlight: New lidar scanner from Omnitron Sensors addresses critical bottleneck in lidar technology

ADAS & Autonomous Vehicle Technology Conference

Conference highlight: Adastec provides progress report on autonomous mass transit

ADAS & Autonomous Vehicle Technology Conference

Conference highlight: Zoox discusses the future of radar

ADAS & Autonomous Vehicle Technology Conference

AVSandbox – sensor realistic simulation environment

Claytex

Conference Highlight: Panel discussion – Safety validation for highly automated driving

ADAS & Autonomous Vehicle Technology Conference

Foretellix addresses the biggest challenge for achieving safe, large-scale commercial autonomous driving

Foretellix

3DAI City – AI-generated live maps transforming urban and highway management

Univrses

Collaboration by standardization

ASAM

WHAT YOU WILL SEE AT THE EXPO

- ADAS (pedestrian targets, datalogging, GNSS positioning, testing, validation, sensor fusion)

- Connectivity (data, wireless, 5G networks, in-vehicle)

- Cybersecurity

- Legislation

- AI and sensor fusion (Nvidia, synthetic data, raw data sensor fusion)

- Simulation (digital twins, sensor performance, synthetic data, environmental test chambers, XIL, VIL, MIL, SIL, HIL)

- Mapping

- Positioning (GNSS, sensor calibration)

- Safety

- Validation and Testing (MIL, SIL, DIL, VIL, HIL, signal testing, sensors, ADAS, simulation)

- Sensors (camera, radar, lidar, perception, GPS)

- V2X (vehicle to everything)

- Datalogging, acquisition and connectivity (cables and harnesses, video cameras, CPU acceleration cards, sensors)

- Mobility solutions

AND MORE!

Book a booth

For further details, please contact:

Event director

Chris Richardson

CONFERENCE INFORMATION

DEVELOPING AN AUTONOMOUS VEHICLE?

THIS IS THE CONFERENCE YOU SHOULD ATTEND!

Hear from over 50 expert speakers discussing the key topics concerning the development and testing of safe autonomous driving and ADAS technologies, including software, AI and deep learning, sensor fusion, virtual environments, verification and validation of autonomous systems, testing and development tools and technologies, real-world testing and deployment, and standards and regulations.

Topics are likely to include:

- Strategies, innovations and requirements for the safe deployment of ADAS and autonomous technologies

- Software, AI, architecture and data management

- Standards, regulations and law, and their impact on engineers and technology

- Advanced simulation and scenario-based testing

- Real-world test and deployment – lessons learned

- Sensor testing, development, fusion, calibration and data

First Speakers Announced

Check here for updates

Edward Schwalb

Schwalb Consulting LLC

Evan Smith

Torc Robotics Inc.

Wei Lu

Didi Research America

Bernard Soriano

California Department of Motor Vehicles

Kanwar Bharat Singh

The Goodyear Tire & Rubber Company

Edward Straub

SAE-ITC

Fengxiang Qiao

Texas Southern University

Hakan Sivencrona

Volvo Cars

Rohan Chandra

The University of Texas at Austin

John Komar

Ontario Tech University

Miriam Di Russo

Argonne National Laboratory

Jongryeol Jeong

Argonne National Laboratory

Speaker Registration

Interested in speaking at the event?

If you would like to make a presentation at the ADAS & Autonomous Vehicle Technology Expo California 2024:

The organizer of ADAS & Autonomous Vehicle Technology Expo California 2024 invites you to suggest a presentation for inclusion in the conference taking place at the San Jose McEnery Convention Center on August 28 & 29, 2024.

Your presentation should provide a technical insight, share unique challenges and experiences, and discuss technical solutions, addressing the challenges and opportunities of developing AI and software platforms for autonomous and self-driving vehicles; AI/programming best practice; sensor fusion; and how to understand, use and manage big data.

We are also seeking presentations on AV testing, validation and development. Topics will include, but will not be limited to, testing in synthetic environments; HIL; real-world and open-road testing; HD mapping; lidar, radar and camera development; cyber-threat testing; V2V and V2X; embedded software testing; plus all-important safety standards and legislation.

If you have worked on a relevant project, have an opinion you’d like to share with the industry or a theory you would like to discuss, please submit your presentation title and a 100-word abstract. Sadly, we can’t accommodate every submission, but an early abstract or expression of interest in speaking will increase your chances of securing a presentation position within the program.

Please submit your proposal using the link below. Alternatively, if you would like to discuss your submission in more detail, please do not hesitate to contact me.

Press Center

Welcome to the Press Center for ADAS & Autonomous Vehicle Technology Expo California 2024.

Event Marketing

The logos can be used to post the event details on a company website or calendar listing.

These event logos and banners have been provided for use by sponsors, speakers and conference delegates to share information on the event with friends and colleagues.

Breaking News

Harman Ignite platform introduced in Tata Motors’ passenger vehicles

Tata Motors, an Indian automaker, has chosen Harman Ignite Store as its in-vehicle app store, aiming to expand accessible and reliable mobility experiences to more customers in a new region.

Rohde & Schwarz and IPG Automotive collaborate on hardware-in-the-loop automotive radar test solution

Technology company Rohde & Schwarz and software company IPG Automotive have introduced a complete hardware-in-the-loop (HIL) automotive radar test solution.

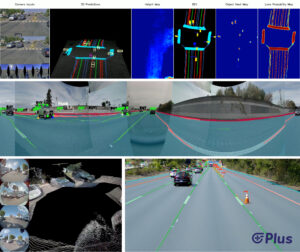

Plus introduces perception software modules for next-gen vehicles

Plus, a provider of autonomous driving software solutions, has launched PlusVision, AI perception software designed for use in advanced safety systems, ADAS applications and higher levels of autonomy.

Contact Us

Event director

Chris Richardson

Registration / badge queries

Clinton Cushion

Event Venue

Event venue location info and gallery

San Jose McEnery Convention Center, USA

150 W San Carlos St,

San Jose,

CA 95113,

USA

For information regarding accommodation please click here.